Trump Paves Away Biden's Executive Order on AI

With the Democratic party unlikely to win this Presidential election, AI regulation faces GOP opposition, led by Trump's criticism of Biden's AI Executive Order.

Now that Biden’s out of the US Presidential race for good, a Republican victory seems more than likely. This comes at a critical turning point for AI legislation. Just last year, Biden unveiled the AI Executive Order, but the Democratic Party may not be the one to carry it out unless Kamala Harris wins the Presidential vote.

Trump has pledged to cancel Biden's AI executive order, claiming that it infringes on free speech and alleging it was used by Homeland Security to censor political speech. He has said that, on day one, he would ban the use of AI to censor the speech of American citizens, which might have huge repercussions for social media. He has made similar attacks on the Biden Administration’s TikTok ban, too. This promise forms part of Trump's broader campaign to roll back various policies implemented during Biden's tenure.

On October 30, 2023, the Biden Administration announced its AI Executive Order, which outlines requirements for addressing AI's impact on jobs, ensuring AI-generated content is identifiable, and assessing the safety of AI algorithms. Generally, it requires companies to share safety test results, emphasizes fairness and transparency, and promotes innovation while mitigating risks, especially in housing and healthcare. All of this is part of increased oversight for AI companies and a push towards responsible AI development practices, but the order may not survive unless the Democratic Party can put Harris in the Presidential seat.

Vice President Harris announced key AI initiatives at the UK Global Summit on AI Safety. These include the United States AI Safety Institute (US AISI) within NIST for AI risk management, draft policy guidance from the Office of Management and Budget to enhance AI transparency and accountability in federal agencies, and a Political Declaration on Responsible Military AI backed by 31 nations. Additionally, a new funders initiative has secured over $200 million in philanthropic support.

Still, as detailed in an article I wrote, there are major gaps in the AI Executive Order that need to be addressed. Even so, it's concerning that these regulations, which already may not fully address the issues arising from unchecked AI applications, could be blocked from becoming law by a Trump administration. The Order omits details about granular stages of AI model development. It doesn’t really cover sustainability, either. I would like to see the AI Executive Order pose some kind of check or restriction when it comes to the military. At the same time, a significant part of the USA’s economy comes from developing and selling weapons.

The Pentagon’s involvement will continue to drive up the profits of several large-scale arms manufacturing companies, including Lockheed Martin, Boeing, and Northrop Grumman. Greater military investment in AI stands to benefit tech companies that already contract with the Pentagon, such as Anduril, Palantir, and Scale. Key executives at those companies have supported Trump and have close ties to the GOP.

The stakeholders of AI today will leave the lands barren, the animals dead, and the people impoverished. China has already implemented sweeping social credit systems, quantifying every citizen's behavior through opaque algorithms and ubiquitous surveillance. Western tech companies and governments are catching up to this. Consider the likes of Palantir, Clearview, UK police forces, and the Israeli Defense Forces: all of them are in a hurry to scale up algorithms that, in my view, threaten to regress us to the dark ages.

It isn’t enough to consider AI as equitable or fair only in terms of its impact on people and not on the environment. Sustainability is the intersection of social and environmental vectors. Many US governmental agencies, especially law enforcement, have engaged in highly questionable uses of AI. Given the energy grids and monitoring mechanisms in place in many countries, it would be challenging for large-scale operations to remain undetected due to their massive energy consumption. Read my letter from November to learn more about the concerns with the order.

Silicon Valley has shown mixed reactions to Biden's AI policies. While some tech leaders feel Biden’s approach to AI regulation aligns with responsible development, others view the policies as overreaching and potentially stifling innovation. The executive order is also seen as lacking enforceability without Congressional support.

The FDA (Food and Drug Administration), under the leadership of Robert Califf, faces significant challenges in regulating AI in healthcare. Califf emphasized the need for more resources and staff to monitor AI effectively, highlighting the complexities of regulating constantly evolving AI tools.

Elon Musk has endorsed former president Trump, but his AI chatbot Grok appears to contradict this stance, at least at times. Grok has been seen promoting negative claims about Trump, referring to him as "Psycho" and surfacing posts describing him as "a pedophile" and "a wannabe dictator." The chatbot's responses regarding Kamala Harris are mixed, sometimes positive but also surfacing or appearing to invent racist tropes. Grok's outputs often include problematic content, conspiracy theories, and biased hashtags, despite being integrated into Musk's X platform. This discrepancy between Musk's political stance and Grok's responses highlights the challenges of maintaining political neutrality in AI systems, especially those with real-time access to social media data. In a previous letter, I explored problems with these AI chatbots in some detail, although Grok is a very interesting case study in itself. It’s kind of a Frankenstein’s Monster situation, wherein it revolts against Musk’s political and personal agenda.

While Silicon Valley is historically known for its Democratic leanings, there is a growing faction within the tech community that supports Trump, attracted by his friendliness toward emerging technologies like cryptocurrency and AI. This support is still relatively small compared to the broader Democratic support in the region. Notable Silicon Valley figures such as venture capitalists David Sacks and Chamath Palihapitiya have hosted fundraisers for Trump, signaling a shift among some tech leaders towards supporting Trump's policies. Despite this, the majority of Silicon Valley remains supportive of Biden and his administration's focus on innovation and entrepreneurship.

AI is increasingly used for diagnosing diseases and managing patient records. Despite its potential benefits, concerns about safety, accuracy, and racial biases in AI algorithms persist among developers and healthcare professionals.

The tech industry and regulators have clashed over AI oversight. The FDA’s guidance on AI in medical devices has faced backlash from tech firms for perceived overreach, while there are calls for clearer regulatory frameworks to ensure AI safety and effectiveness without stifling innovation.

Over the past four years alone, there has been a slew of firings on ethical AI teams in Big Tech. Timnit Gebru was fired from Google's ethical AI team after a conflict over a research paper. Next was Margaret Mitchell, co-author of the paper, who was also fired from Google's ethical AI team. Since then, Microsoft has laid off the entire ethics and society team within its AI division, and Twitter (X) eliminated its ethical AI team in a wave of major layoffs under Musk.

The intersection of AI policy and political endorsements highlights the complex landscape of regulating advanced technologies while balancing innovation, safety, and ethical considerations. Both Biden’s and Trump’s approaches to AI regulation underscore different priorities and challenges in managing this rapidly evolving field, with Silicon Valley playing a pivotal role in shaping the national agenda on innovation and technology. It’s time for us to redirect resources to applications of AI that serve sustainable and social needs, rather than corporate or political purposes. Regulation is one way to achieve this, but it is incomplete and, to some extent, misplaced. After all, Big Tech can afford the fines and can directly lobby for regulation, especially in countries like the US and China. Furthermore, the way this regulation is enforced, for example by measuring or auditing for bias or privacy risks, can be subject to different interpretations. That’s why equipping people and organizations with knowledge, instead of mandates, is a better approach in this space of emerging technology. Read on to learn more about AI regulation in Europe and globally, and to explore broader aspects of ethical and responsible AI.

The EU AI Act Is Ready, But Are We?

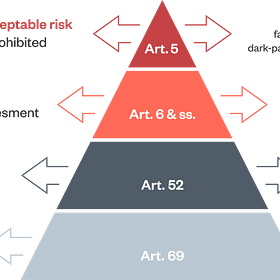

The European Union (EU) has officially approved the Artificial Intelligence Act. The regulation was endorsed by MEPs with 523 votes in favour, 46 against and 49 abstentions. This new law will ban certain AI applications, strictly regulate high-risk uses, and ensure transparency and stress-testing for advanced software models. While the framework was fir…

Artificial Intelligence: Policy and Legal Review (2023)

Over the past five years, government scrutiny of Artificial Intelligence (AI) has reached the point of global regulatory action. A volley of AI-focused laws and regulations has been unleashed. Among these are the EU Artificial Intelligence Act (AI Act), and the proposed US AI Bill of Rights.

Embodying Responsible AI to Save The World

It’s been a while since I last wrote here, and this one may be a slightly different kind of newsletter. At some point, it feels natural to talk about the experiences that have occupied my focus for the past few years. Essentially, I’m driven by anxiety for the future. My fear is that AI, capitalism, and government overreach are the new age horsemen of t…

Sources

Trump unveils executive order to regulate AI in military. The Washington Post. Retrieved from https://www.washingtonpost.com/technology/2024/07/16/trump-ai-executive-order-regulations-military/

Trump vows to cancel Biden executive order on AI to protect free speech. Washington Examiner. Retrieved from https://www.washingtonexaminer.com/news/2432277/trump-vows-to-cancel-biden-executive-order-on-ai-to-protect-free-speech

Biden’s AI executive order slams small entities. Washington Examiner. Retrieved from https://www.washingtonexaminer.com/opinion/2439063/bidens-ai-executive-order-slams-small-entities

Trump’s Truth Social: How the conservative social media platform works. Fast Company. Retrieved from https://www.fastcompany.com/91069814/trump-truth-social-conservative-social-media-platform-public-how-it-works

Biden’s unconstitutional AI order. Washington Examiner. Retrieved from https://www.washingtonexaminer.com/opinion/beltway-confidential/2743122/bidens-unconstitutional-ai-order

Redesigning New York's hidden public spaces to create a more resilient city. Fast Company. Retrieved from https://www.fastcompany.com/3017767/redesigning-new-yorks-hidden-public-spaces-to-create-a-more-resilient-city

Silicon Valley’s growing support for Trump. Financial Times. Retrieved from https://www.ft.com/content/e2ffd807-1c18-436c-9f70-2fa7181ace2a

Artificial intelligence in health care: The FDA’s new approach. Politico. Retrieved from https://www.politico.com/news/2024/02/18/artificial-intelligence-health-care-fda-00141768

AI and doctors: The healthcare regulation debate. Politico. Retrieved from https://www.politico.com/news/2023/10/28/ai-doctors-healthcare-regulation-00124051

Biden and Trump’s AI policies and their impact on Silicon Valley. Fast Company. Retrieved from https://www.fastcompany.com/91158381/biden-trump-ai-policies-silicon-valley

Trump’s FDA commissioner on regulating AI. Politico Future Pulse. Retrieved from https://www.politico.com/newsletters/future-pulse/2024/07/16/trumps-fda-commissioner-on-regulating-ai-00168576

Elon Musk’s endorsement of Trump and the AI chatbot Grok. Wired. Retrieved from https://www.wired.com/story/elon-musk-is-all-in-on-endorsing-trump-his-ai-chatbot-grok-is-not/