The Egregious Rise of AI-Surveillance Technology

Whether in the West or in China, the rise of surveillance technology, in the form of facial, biometric and lip-reading technology, pushes us closer to a dystopian reality.

Recent advancements in Artificial Intelligence (AI) have made facial surveillance a viable tool for both corporations and law enforcement agencies. Facial recognition, biometric scanning, and lip-reading software have become commonplace in our daily lives. From unlocking our smartphones to tracking our movements in public spaces, surveillance technology has become a pervasive presence. Facial recognition software can scan faces in real time, match them against a database of images, and even predict someone's age, gender, and mood with startling accuracy. Meanwhile, biometric tech can identify individuals based on their unique physical or behavioral characteristics, such as fingerprints, iris scans, or gait patterns. Lip-reading tech can analyze lip movements to reconstruct conversations or identify speakers, even in noisy environments. Additionally, police have used devices known as “WiFi sniffers” and “IMSI catchers” to track a target's movements, using information from phones in the vicinity. All of this raises serious questions about our fundamental rights as consumers, as citizens, and as human beings. In this newsletter, we will explore the rise of facial, biometric, and lip-reading technology, and how these technologies are used to erode liberties and invade privacy.
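Matching a face against a database usually means comparing numeric “embeddings” rather than raw images. The sketch below is a minimal illustration under stated assumptions: a face-recognition model (not shown) has already turned each face into a short vector of numbers, and the names, vectors, and 0.8 threshold are all hypothetical. It returns the closest database entry by cosine similarity, or no match if nothing is similar enough.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_match(probe: list[float], gallery: dict[str, list[float]], threshold: float = 0.8):
    """Return (name, score) for the most similar gallery entry,
    or (None, score) if even the best score falls below the threshold."""
    best_name, best_score = None, -1.0
    for name, embedding in gallery.items():
        score = cosine_similarity(probe, embedding)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < threshold:
        return None, best_score
    return best_name, best_score


# Hypothetical 4-number embeddings; real systems use far larger vectors.
GALLERY = {
    "person_a": [0.9, 0.1, 0.3, 0.2],
    "person_b": [0.1, 0.8, 0.5, 0.1],
}

if __name__ == "__main__":
    probe = [0.85, 0.15, 0.35, 0.2]  # hypothetical face seen by a camera
    print(best_match(probe, GALLERY))
```

The threshold is the key design choice: lowering it catches more genuine matches but also flags more innocent people as “matches”.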

A report from Big Brother Watch notes that even though this tech is improving, the error rates for facial recognition algorithms remain high: for several systems used by law enforcement agencies, more than 90% of the matches they flagged were misidentifications. In the United Kingdom, under the Human Rights Act 1998, the right to a private life (Article 8) may only be interfered with where the interference is both “necessary and proportionate”. Given these high failure rates and the lack of evidence that these approaches actually help crack down on serious crime, this condition is not met. The technology's main appeal seems to be that it helps organizations hit target metrics, which raises the question of how those metrics are chosen in the first place. The effect of these systems is not a reduction in crime, but a disruption of civil life and liberties. Despite their notoriously poor performance, these systems have been installed in public areas, largely in London and South Wales. In the USA, similar technologies have emerged in Boston, while some states, such as California, have moved to ban them for law enforcement. Across the globe, this tech has become commonplace in Russia, India, Iran, the United Arab Emirates and Singapore. But according to The Times, around half of the world's nearly one billion surveillance cameras are in China. That number will only grow: the more cameras there are, the more training data the algorithms receive and the better they perform, even if that makes life worse for many innocent people.
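Figures like these can describe the share of a system's alerts that were wrong, rather than the share of faces it misread. A back-of-the-envelope calculation shows how the two differ. The rates below are entirely hypothetical and are not taken from the Big Brother Watch report: a system that rarely errs on any single face can still produce mostly false alerts when the people it is looking for are rare in the crowd.

```python
def share_of_alerts_wrong(crowd_size: int, watchlist_present: int,
                          false_match_rate: float, hit_rate: float) -> float:
    """Fraction of alerts that point at the wrong person (expected values)."""
    innocent = crowd_size - watchlist_present
    false_alerts = innocent * false_match_rate      # innocent faces wrongly flagged
    true_alerts = watchlist_present * hit_rate      # wanted faces correctly flagged
    return false_alerts / (false_alerts + true_alerts)


if __name__ == "__main__":
    # Hypothetical: 10,000 faces scanned, 1 wanted person among them,
    # a 0.1% chance of falsely matching any innocent face,
    # and a 90% chance of catching the wanted person.
    share = share_of_alerts_wrong(10_000, 1, 0.001, 0.9)
    print(f"{share:.1%} of alerts are wrong")  # prints: 91.7% of alerts are wrong
```

Under these assumed rates, roughly 10 innocent people are flagged for every 0.9 genuine hits, so over nine in ten alerts are wrong even though 99.9% of innocent faces are correctly ignored.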

To illustrate just how deep this problem runs, it is worth recapping the controversies of the past four years alone. In 2019, the city of San Francisco banned the use of facial recognition tech by the police and other city departments, due to concerns about privacy violations and the potential for the tech to be misused. The city of Boston also imposed a moratorium on police use of facial recognition. 2020 was a particularly bad year for civil liberties. The New York-based company Clearview AI was revealed to have collected billions of photos from the internet, including from social media sites, to build a facial recognition database. This sparked public debate about privacy, data security, and the potential for the tech to be misused for law enforcement or commercial purposes. The Detroit Police Department used facial recognition technology to arrest Robert Williams, an African American man, for a crime he did not commit: the technology produced a false positive, leading to his wrongful arrest and the violation of his rights. There have been a number of other cases of Black men being falsely arrested due to facial recognition, and the technology has also been used to track the behaviours and patterns of Black Lives Matter protesters. That same year, the FBI was using a facial recognition database that contained millions of photos, including those of people who had never been convicted of a crime. This demonstrates the high risk of the tech being misused for political purposes. Amazon was also criticized by civil rights groups for selling its facial recognition technology, Rekognition, to law enforcement agencies, amid concerns that it could be used for mass surveillance and racial profiling.

There are always parallels to be found between the technocultures of China and the West. Because of the closer relationship between the state and private companies in China, the country is much quicker to adopt new technologies en masse. This is especially true of policies and implementations that give the CCP regime a closer look into the minds and behaviours of its citizens. Police forces in China are collecting voice prints from microphones attached to cameras. In Zhongshan, officials requested devices that could record audio within a radius of at least 300 feet. This data is added to a database for the purpose of identifying suspects faster. The CCP's media mouthpiece even boasted on Twitter that the country's facial recognition tech could scan the faces of China's 1.4 billion citizens in one second.

What may be more alarming is the sheer scale and functionality of the software used for surveillance, in particular an app called WeChat, which collects even more granular data from each of its 1.3 billion users. German journalist Kai Strittmatter has discussed WeChat in various interviews, commenting that “It started as a normal chat program like WhatsApp, but very soon it turned into a kind of Chinese Facebook. Then it became a Chinese Uber. You could get credit, you could apply for credit to your bank with it. You could use it as an ID. You could file your divorce papers through this app to the local court, and you can do all your financial transactions through this app. And that works with bar codes. And they've been using these bar codes for a long time already”. As a form of population control, the CCP uses this kind of information for a social credit system. Someone can be blacklisted for certain behaviours or legal violations, even for infractions as minor as overdue library books. They may be barred from travel, such as flying (at least 17 million people have experienced this already), or their children may be banned from certain schools. In Shenzhen, often called the “Silicon Valley” of China, camera and billboard systems can display the faces of jaywalkers in the area, along with their names and ID numbers. It is an intimidating and terrifying power move by the CCP, a form of public shaming that makes me feel as though all the warnings of dystopian sci-fi have at last come to fruition. Given the attempts of law enforcement and government institutions in the West, such as in the US and UK, to use this tech, it feels as if China is simply our own society ten years ahead. After all, data brokers already influence things like our career opportunities, voting outcomes, insurance plans and financial stability. Even so, the situation in China is far more dire than in the West.

The Chinese government also runs a massive facial recognition program called Skynet, aimed at enhancing public security and cracking down on crime. The system has been criticized for violating citizens' privacy rights. The name Skynet rightly conjures images of dangerous androids breaking down doors to kill you. And for groups such as Hong Kong protesters and Uighur Muslims, this is partly what has already happened.

One Chinese company, Megvii, was sanctioned by the U.S. government and placed on the Bureau of Industry and Security's Entity List on October 9, 2019, over the use of its tech in human rights abuses against Uighurs in Xinjiang. Chinese police have been using the tech to target Uighurs in wealthy eastern cities like Hangzhou and Wenzhou and across the coastal province of Fujian. In the central Chinese city of Sanmenxia, along the Yellow River, law enforcement ran a system that, over just one month, screened residents half a million times to flag whether they were Uighur. The demand for such capabilities is spreading. In 2018 alone, nearly two dozen police departments across China requested access to and support for these surveillance methods. In Shaanxi, officials sought to acquire AI-driven camera systems for the purpose of identifying Uighur Muslims. Government officials often use terms such as “minority identification” to describe their blatant targeting of Uighurs and other marginalized ethnic groups who may not resemble China's majority Han population. The tech companies behind these systems boast about how quickly and precisely they can spot Uighur Muslims and alert law enforcement. In contrast, most of the outcry against algorithmic technology in the West has focused on tools that affect the population indirectly, as explored in Cathy O'Neil's book Weapons of Math Destruction.

The power and breadth of surveillance technologies, especially in China, are increasing at a breakneck pace. One example is lip-reading tech. From a technical perspective, companies are achieving impressive results, capturing words far more accurately than previous approaches allowed. An app called SRAVI analyzes lip movements and returns its interpretation in about two seconds. Liopa, the startup offering this app, markets it as a service to healthcare providers. But Liopa has already moved from healthcare into law enforcement applications: the company is working with CCTV footage on a project that “is being funded by the British Defense and Security Accelerator”. Two other companies, Motorola Solutions and Skylark Labs, have followed a similar trajectory. The former holds a patent for a lip-reading system designed to aid police. Meanwhile, Skylark Labs, whose founder has ties to the U.S. Defense Advanced Research Projects Agency (DARPA), told Motherboard that its lip-reading system is currently deployed in private homes and at a state-controlled company in India to detect foul and abusive language. The company's motto is “Predict to Protect”. There is something quite chilling about this expression. While the ability to detect and crack down on criminal activity is welcome, it is a slippery slope that leads to the very same tech being used on the general public to store data on their communications, which could then be used by governments or corporations for a variety of “less than virtuous” purposes. If there is one constant in the evolution of the AI and data analytics industry, it is that data will always end up being used for targeted commercial or political advertising. The same tech Skylark offers for military and law enforcement purposes is also available for school campuses, workplaces and even domestic settings.
Even if this tech was developed with good intentions, the road to hell is paved with them.

Another important case study of surveillance tech being used to violate rights is Iran, where the authorities are employing it for a disturbing practice known as “modesty enforcement”, under the country's “modesty laws”, targeting mostly women. This includes flagging women who are not wearing hijabs or are otherwise breaking dress codes the regime deems mandatory. The technology came to light alongside a decree signed by the country's hardline president, Ebrahim Raisi, to restrict women's clothing. The decree was signed just a month after the national “Hijab and Chastity Day” on 12 July, which led to mass protests, with women using social media to share images and clips of themselves refusing to comply with these clothing rules. Since then, there has been a spate of arrests and forced confessions, documented on the internet and on television.

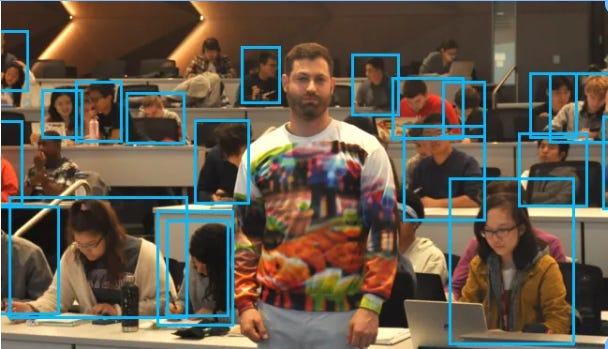

The threat this poses to human life as we know it cannot be overstated. But it may be helpful to consider ways an individual can avoid some of the issues with surveillance technology. One such method uses “adversarial patches”, which can confuse algorithms such as “YOLO”, a state-of-the-art technique for detecting people in camera footage. Simply wearing a jumper covered in particular patterns or patchwork was enough to fool this algorithm up to 90% of the time. So perhaps more diverse, expressive and confusing clothing choices may be what we need to protect our civil liberties. Meanwhile, we can also push back against these technologies through our choices as voters and consumers, and through our representation in public policy formation.
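The idea behind an adversarial patch can be shown at toy scale. The sketch below assumes a hypothetical linear “person detector” standing in for YOLO; real attacks optimise a printable patch against a deep network's detection score across many photos. Gradient descent changes only the pixels inside the patch region, nudging them in whichever direction lowers the detector's confidence. All pixel values, weights, and the 0.5 detection threshold are made up for illustration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def detector_score(image: list[float], weights: list[float], bias: float) -> float:
    """Toy 'person detector' (hypothetical): a logistic score over a flattened image."""
    return sigmoid(sum(w * x for w, x in zip(weights, image)) + bias)


def optimise_patch(image, weights, bias, patch_indices, steps=200, lr=0.5):
    """Gradient descent on the patch pixels only, lowering the detector's score.

    Pixels outside patch_indices are never changed, mimicking a printed patch
    that covers only part of the person."""
    patched = list(image)
    for _ in range(steps):
        s = detector_score(patched, weights, bias)
        for i in patch_indices:
            grad = s * (1.0 - s) * weights[i]  # d(score)/d(pixel i) for a logistic score
            # Step against the gradient, then clip to the valid pixel range [0, 1].
            patched[i] = min(1.0, max(0.0, patched[i] - lr * grad))
    return patched


# Hypothetical 6-pixel "image" of a person; the patch covers the first three pixels.
IMAGE = [0.8] * 6
WEIGHTS = [1.5, -0.5, 2.0, 1.0, 1.5, 0.5]
BIAS = -2.0
PATCH = [0, 1, 2]

if __name__ == "__main__":
    patched = optimise_patch(IMAGE, WEIGHTS, BIAS, PATCH)
    print(f"before: {detector_score(IMAGE, WEIGHTS, BIAS):.3f}")
    print(f"after:  {detector_score(patched, WEIGHTS, BIAS):.3f}")
```

In this toy setup the score falls from roughly 0.94 to roughly 0.475, below the hypothetical 0.5 threshold, even though half of the “image” is untouched.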