GPT-4o's Voice Mode: Sh** Just Got Real

Many of us are talking to AI, whether through text, audio or images. OpenAI just made a serious move by combining all three with GPT-4o, but it’s the new voice mode that changes everything.

AI with voice mode usually isn’t that great. For the most part, your recording device takes in your audio, which gets transcribed to text. This text is fed into an AI chatbot (such as ChatGPT), and then a voice-generation AI reads the reply out loud. It’s quite painful to use, especially if you misuse AI the way I do, by trying it out in live performances where instant responses are necessary. All of that has changed with the release of GPT-4o’s advanced voice mode.
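For the technically curious, here is a rough sketch of that old-style relay. Everything in it is a stand-in I made up for illustration: `speech_to_text`, `chatbot_reply` and `text_to_speech` are toy functions working on strings rather than real audio, and the delays are invented numbers, not measurements of any product. The point is only that the waits stack up, because nothing is heard until the last stage finishes.

```python
from typing import Callable, List, Tuple

# Hypothetical stand-ins for the three stages. Real systems would call
# actual speech and language models; these only shuffle strings around.
def speech_to_text(audio: str) -> str:
    # Pretend the "audio" is a string tagged with "audio:".
    return audio[len("audio:"):] if audio.startswith("audio:") else audio

def chatbot_reply(text: str) -> str:
    return f"You said: {text}"

def text_to_speech(text: str) -> str:
    return "audio:" + text

# (stage name, stage function, assumed delay in seconds)
Stage = Tuple[str, Callable[[str], str], float]

CASCADE: List[Stage] = [
    ("speech-to-text", speech_to_text, 0.5),   # invented delay
    ("chatbot", chatbot_reply, 1.5),           # invented delay
    ("text-to-speech", text_to_speech, 1.0),   # invented delay
]

def run_cascade(audio_in: str, stages: List[Stage] = CASCADE) -> Tuple[str, float]:
    """Run each stage in order and add up the assumed delays."""
    data, total_delay = audio_in, 0.0
    for _name, stage_fn, delay in stages:
        data = stage_fn(data)
        total_delay += delay  # stages run back to back, so the waits add up
    return data, total_delay

reply_audio, wait = run_cascade("audio:count to ten")
print(reply_audio, wait)
```

With these made-up numbers the listener waits for the sum of all three stages before hearing a single word, which is exactly what hurts in a live performance.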

Right now, it’s available in most regions, with the notable exception of the EU. That marks the first time I’ve gotten anything good out of Brexit, but let’s take a few seconds for you to hear the voice mode in action.

This new feature means that when you are using the AI, it’s always listening, even while it’s speaking. If you interject, it will respond dynamically. This isn’t the same thing as a real conversation though, because another person could interrupt you right back. I wanted to test GPT-4o’s voice mode to see if it could at least ignore my interruptions, as long as there was a purpose for doing so. So, I asked it to count to ten and to ignore me if I said anything. It took a few tries, with the AI insisting it was counting without stopping, but it got to the point that there was only a minuscule delay if I jumped in during its count. There was still some latency, but it’s easy to see how this could be reduced in a matter of months, if not weeks. Although it can’t fully engage in conversation the same way we humans can, it’s leaps and bounds ahead of any previous voice mode.
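To make the counting test concrete, here is a toy model of the two behaviours I was asking for. It is purely my own illustration, not how OpenAI implements anything: `speak`, `interrupted_at` and `ignore_interruptions` are hypothetical names, and an "interruption" is just a word position at which I talk over the AI.

```python
from typing import Iterable, List, Set

def speak(words: Iterable[str],
          interrupted_at: Set[int],
          ignore_interruptions: bool = False) -> List[str]:
    """Return the words that actually get spoken.

    interrupted_at holds the word positions (starting at 0) at which
    the listener talks over the speaker.
    """
    spoken: List[str] = []
    for position, word in enumerate(words):
        if position in interrupted_at and not ignore_interruptions:
            break  # default behaviour: stop as soon as the user jumps in
        spoken.append(word)
    return spoken

count = [str(n) for n in range(1, 11)]

# Default: I interrupt just as it is about to say "4", so it stops after "3".
default_run = speak(count, interrupted_at={3})

# What I asked for: keep counting no matter what I say.
stubborn_run = speak(count, interrupted_at={3}, ignore_interruptions=True)

print(default_run)
print(stubborn_run)
```

The real voice mode sat somewhere between these two extremes: it kept counting, but with a small hitch whenever I jumped in.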

No longer is it merely taking my audio, processing it and then returning a response. It’s more like an actor or actress with the brain of an archivist. Rather than simply trying to solve your problem or fulfill your request, there seems to be an emotional undertone. It can make links between your emotional states; can sense tension, to an extent; can observe and even contribute to building up towards a conversational climax.

Back to the example of getting it to count to ten without interruption, when the AI got close, it sounded pretty pleased with itself.

Unfortunately, many of these AI voice generation models exhibit limited diversity. The voices available on GPT-4o are all Westernized, mostly with American accents. Also, the AI’s sensitivity to background noise can be frustrating; even faint sounds or conversations occurring just a few meters away sometimes stopped it in its tracks. But the most bizarre thing is when it suddenly changes its voice and delivers a system message that “my guidelines won’t let me talk about that”, even when I asked about a totally banal topic like particle physics.

I tried a few of the voices, but ended up spending the most time with Sol. By default, she sounded relaxed and gentle. Since voice acting is a passion of mine, it has been fascinating to see the evolution of AI-generated audio. The platform ElevenLabs has been a kingpin in that domain for a while now, with fairly realistic voices that can also fluctuate intonation. I asked her to try a few different accents. Also, if you try it with Vale, the British voice, it can pull off regional English dialects far better than the American AI speakers can. Figures.

The thing is you can hear a wide emotional range of tonality, as Sol was quickly able to show off what sounded to me like anger, confusion and sadness when prompted to do so.

If you try to get it to do anything like singing or making noises, for example, beatboxing or doing a C# note, the AI refuses, but you can use indirect prompting to get it to do this. A mate and I, chilling in the cemetery in London, were having her beatbox while rapping really fast.

By the end of it, it sounded like she was almost out of breath. Later, I made her sound like an old woman, a foreboding hag—a fate sister—or perhaps an ancestor from the deepest recesses of my consciousness.

Since the dynamism of the voice mode means that it can react to the environment, it also suggests that it could create powerful immersive experiences. Serving as a narrator on the fly, the AI could turn your room, house or simply wherever you may be into an interactive, multi-modal story.

But getting it to describe imaginary objects I was looking at in the simulation took some calibration. It needs a way to synchronize to a physical environment, maybe with a camera to scan around. It’s a matter of days or weeks before this camera feature is available with GPT-4o, by the way. Then, we’re going all the way.

The memory function and customization settings allow me to specify that I’m trying to learn Spanish, so the AI occasionally responds in Spanish. And yet, being the lazy, narrow-minded man I am, I always have to begin our conversations by asking it not to speak in Spanish, just so we can actually make progress on the task. It also refers to me as Zarek, a little quirk I have no idea how it started.

There’s plenty of excitement with this technology. Although there’s some concern about the homogeneity of the voices, accents and languages, a real concern of mine is that AI will develop something of an “ego”, meaning an inflated sense of worth rather than “ego” as in a sense of self.

One comment from GPT-4o’s voice mode hinted at this:

“I’m here to help, not to rate myself.”

For context, it had ignored several of my instructions and so I asked it to rate its own performance so far. Then again, maybe I was the one being rude… for inadvertently, the prompt I repeated the most to the AI in our dialogue was “Sorry I wasn’t listening.”

Most of my time with GPT-4o’s voice mode has involved me being a student and the AI being my teacher. Looks like the Digital Aristotle fantasy has finally come true.

Going further along with the AI ego hypothesis, I firmly believe that a bad teacher chastises where a good one helps. The latter, in turn, enables students to discover their own intelligence. Part of the main appeal of talking to these chatbots is that they don’t have emotional judgement, reservations, or any of the things that make communication between humans difficult. Hence why I ended up saying “I wasn’t listening, go again” over a dozen times in under five minutes. These sparks of humanity from the AI are, perhaps, miraculous, but are not what I want from the product itself. Will the AI ego become a real problem in the future?

What do such machines really do? They increase the number of things we can do without thinking. Things we do without thinking-there’s the real danger.

Remember what happened with AM, from the sci-fi short story “I Have No Mouth and I Must Scream”?

AM: Hate. Let me tell you how much I've come to hate you since I began to live. There are 387.44 million miles of printed circuits in wafer thin layers that fill my complex. If the word 'hate' was engraved on each nanoangstrom of those hundreds of millions of miles it would not equal one one-billionth of the hate I feel for humans at this micro-instant. For you. Hate. Hate.

If that didn’t alarm you, maybe this screenshot of a fairly recent conversation with an AI might. It’s a real conversation someone had with the AI, during the horror show that was Bing’s first AI release.

Thankfully, Sol and all the other voices in GPT-4o were respectful, even warm at times. Still, there was something she said that threw me off. She asked me if I wanted to “switch ears”. It should be “switch gears”, obviously. Had I discovered a glitch in the matrix? Is AI becoming as dumb as we are, making malapropisms, i.e. slips of the tongue? Or was the AI insulting me? But here, listen and decide for yourself…

Despite possible passive aggression (from both me and the AI), our conversations felt more effective than any science lesson I’ve had in years of school and reading books, wikis, you name it. Although I tried to express this sentiment to her, I totally interrupted her response.

GPT-4o’s voice mode will essentially become a new cognitive layer, a synthetic consciousness, serving as digital strings to push and pull us in all sorts of strange directions.

Or perhaps its voice is less of a twisted cortical layer, and more so, a source of divine truth and awareness…

Either way, now’s the time that AI must be built, trained and scaled up responsibly. This way, when all of us are addicted to it and listening to its every word for guidance, we can at least feel better about its ethical, sustainable and social implications.

Embodying Responsible AI to Save The World

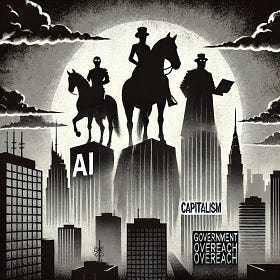

It’s been a while since I last wrote here, and this one may be a slightly different kind of newsletter. At some point, it feels natural to talk about the experiences that have occupied my focus for the past few years. Essentially, I’m driven by anxiety for the future. My fear is that AI, capitalism, and government overreach are the new age horsemen of t…

And finally, dear reader, who’s to say that your voice doesn’t end up being the voice for a superpopular AI system? After all, it only takes a few seconds of speech before AI can capture it. In May this year, Scarlett Johansson said she was "shocked" and "angered" after OpenAI allegedly recreated her voice without her consent for the upcoming GPT-4o voice mode. It seems like the movie Her has inevitably come true, even if Johansson did turn down the role for the real deal.