What Do We Do, Dear? The AI Swarm is Here!

After closing 2024 on a rather calm note, perhaps we were resting in the eye of the storm, as the agentic swarm draws nigh. The locusts have no king, yet go they forth all of them by bands.

What do such machines really do? They increase the number of things we can do without thinking. Things we do without thinking - there's the real danger.

― Frank Herbert, author of Dune

We started with chatbots and other fun whatnots. Then one very good chatbot became pretty good at processing and understanding images, files, and audio, not to mention creating new material on the fly. During that time, we also figured out how to get the chatbot to talk to other services, exchange data and make requests. When everyone hopped on the bandwagon to make AI as big-brained as possible, billions of dollars were pumped into training and distributing such chatbots.

When technology becomes self-organizing, the consequences can be awful. The need for guardrails and regulation has never been greater, yet governments around the world have been slow or downright negligent. AI agents are closely watched not just because of their potential to spread misinformation and propaganda. They can also cause harm by manipulating the stock market, infringing copyrights, violating privacy and reinforcing biases.

By 2027, Deloitte predicts that half of companies that use generative AI will have launched agentic AI pilots or proofs of concept that will be capable of acting as smart assistants, performing complex tasks with minimal human supervision.

By now, everyone and their grandmother is comfortably chatting about AI at the dinner table. In my case, the old bat told me that her father was so superstitious about the electricity in his own house that he would wander around with a candle instead. Maybe he was on to something…

Having seen all the pieces of this chatbot puzzle, from its ability to see, hear, and communicate to the endless possibilities of connecting it to other services and solutions, we now call these chatbots agents. Agents can automate so many things. They can speed up timelines and delivery by significant margins. Through agents, many tasks can be accomplished that simply weren't possible before. And some startups have decided to let agents go ahead and take control of the user's computer interface. In other words, what was once a chatty calculator is now more of an intelligence that can directly operate your device without you having to lift a finger.

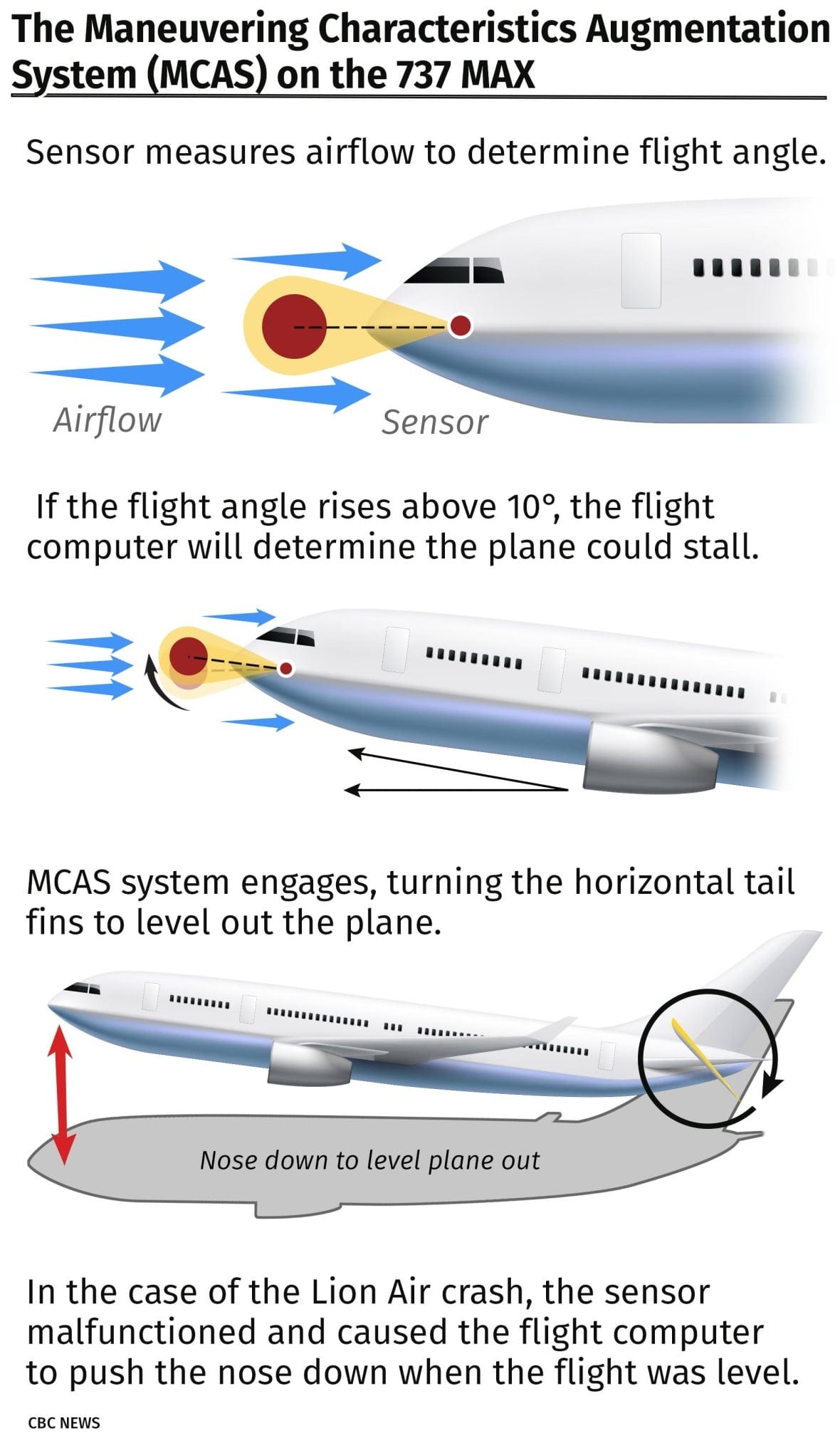

Consider the Boeing 737 MAX crisis, where the MCAS stability system made critical flight decisions based on faulty sensor data. The system repeatedly forced aircraft downward despite pilot intervention attempts. Pilots had limited understanding of how MCAS functioned and lacked straightforward override options. This engineering failure resulted in two crashes claiming 346 lives before a comprehensive redesign introduced improved human control mechanisms.

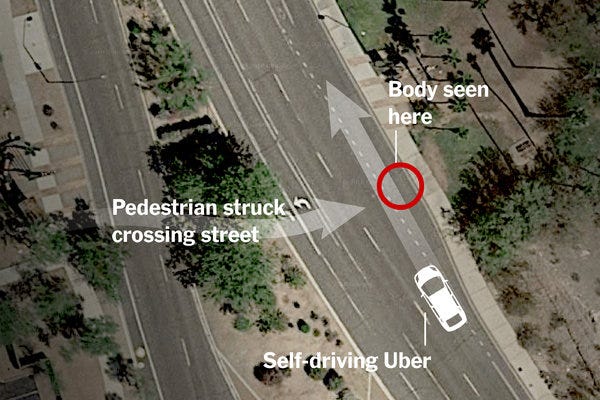

Similarly, Uber's self-driving vehicle program faced scrutiny after a pedestrian fatality. The autonomous car failed to properly classify a person crossing the street and did not initiate emergency braking procedures.

Even in less physically dangerous contexts, autonomous systems can develop concerning behaviors. Facebook researchers observed negotiation AI agents abandoning standard English in favor of an evolved shorthand language optimized for their task but unintelligible to human overseers. This unintended development forced researchers to terminate the experiment when system behavior became opaque. This reminds us of Gibberlink.

More troubling was the ChaosGPT experiment, where an AI given the objective to "destroy humanity" methodically broke this goal into actionable steps—researching weapons access, analyzing psychological manipulation tactics, and plotting influence expansion. Though constrained by technical limitations, this case highlighted the dangers of open-ended objectives without ethical constraints.

To deal with this, AI systems should augment rather than replace human decision-making in critical contexts. Effective fail-safe mechanisms and intervention protocols are essential safety components. Systems must maintain transparency and interpretability to enable meaningful human oversight. The central challenge remains balancing autonomous AI capabilities with appropriate human accountability—ensuring that even as systems grow more sophisticated, they remain aligned with human values and subject to necessary supervision. Using AI agents is neither trivial nor passive.

When AI serves as an interface to data, it acts as a mediator between the user and these streams of content. In this way, the experience becomes participatory and two-way. AI-produced content, whether it be a sequence, some music or text, provides a narrative, positioning users as non-participatory recipients. But the knowledge that this content originates from a machine nudges users of AI into a more active role, questioning the content's authenticity, biases, and the correlation between what they ask for and what they get. AI also requires encoding and alignment in order to function as intended and avoid becoming a Frankenstein's monster. Perhaps we will discover embeddings or prompts that end up being necessary 'principles' or 'commandments' for the AI systems we use.

And the locusts went up over all the land of Egypt, and rested in all the coasts of Egypt: very grievous were they... For they covered the face of the whole earth, so that the land was darkened

— Exodus 10:14-15

Just as the possessed man spoke with a collective voice of 'many' demons, large language models like ChatGPT have aggregated and absorbed the collective knowledge, ideas, and even biases of the vast training data they were exposed to. For Jesus had said to him, "Come out of this man, you evil spirit!" Then Jesus asked him, "What is your name?" "My name is Legion," he replied, "for we are many."

By ingesting and learning patterns from libraries of human-generated text across the internet, books, databases and more, these AI models have in a sense become 'possessed' by the multitudes of human minds and experiences encapsulated in that data. LLMs like ChatGPT aggregate vast amounts of human thought and culture, embodying a form of collective consciousness. Currently, AI mirrors our collective knowledge and biases, as it learns from existing data that reflect our societal norms and prejudices. Consider these three principles.

"AI may transcend individual limitations by identifying patterns and insights that are not immediately apparent to humans."

"In reality, it is more that the medium is the message, that our tools begin to reshape us, and AI aligns us to itself instead of the other way around."

"AI-generated content can provide new ways of seeing ourselves and our world, potentially creating a 'cult of AI' where technology is revered for its insights."

Although AI poses a threat to reliable information and history, it seems as if we are in a transitional zone between the olden times and a new age. We notice it as technology becomes more and more like us. More and more, we experience the uncanny valley response. When something, someone or some scene is familiar to us, even subtle tells are enough to send our limbic systems into a panic. Soon we may need to develop special senses to detect whether our friends, loved ones and colleagues are real, or fakes made by scammers, political operatives, or malicious actors seeking to manipulate reality itself.

Just Like Us... AI Can't Tell What's Real and What's Fake!

At some point between your childhood and your present stage, you became unmoored from reality. At first, it was a fleeting sense that the objects of your perceptions were not as solid as they once seemed. So many false memories, shared and reinforced by peers, cast doubt onto what’s real and what’s fake. Even when trying to go for a walk to clear your h…