The Simulacra Invasion Is Upon Us

When icons go beyond nostalgia, they take over the digital landscape, as the beloved characters from our favourite movies, books and shows appear where we didn't expect to see them.

Can you spot them? There are two famous Pixar characters in the image below, but they weren’t put there intentionally. Rather, this game of “Where’s Waldo” is the result of an AI model, one created by AI media startup Luma and used to generate footage for a film dubbed “Monster Camp”.

Did you see them? If you recognized Mike and Sulley from Monsters, Inc., then you’ve also spotted at least two cultural artifacts that have been nearly perfectly reproduced in a novel piece of content. If you’re not afraid of these two monsters, you will be by the end of this article.

It’s far from the first time this kind of memorization has occurred, where a model learns a training sample too closely. The next example, taken from the same footage, is even more blatant.

How is it that AI can generate some new setting or media that appears to be invaded by the exact form of an entity in its training data?

AI learns such close associations between specific concepts and general ones that it has likely tied a phrase like “creatures in a dreamlike world with fuzzy monsters” to these particular characters. Any variation of that phrase may be equated with Sulley and Mike, depending on the prompt given to a model whose training dataset clearly included Monsters, Inc. images.

The entanglement between phrases and entities may, however, be even stranger and more complex than that. It’s possible that AI learns abstract connections between certain syllables that allow it to duplicate a specific character or symbol from memory. This works in much the same way as fan-art on the Internet, where Shrek may be depicted in wedlock with Shadow the Hedgehog, or any other multi-modal meme that brings together characters from entirely different universes. Except in this case it’s “AI fan-art”, as if the AI’s very dreams were available for us to grab snapshots from at any point, hence the chaos and the occasional misplaced fidelity. Nostalgic characters, with their emotional, visual and cultural appeal, may then rear their old heads in unexpected places.

Indeed, a significant amount of memory and computation is allocated to these two monsters, Sulley and Mike, and the little girl who has an adventure with them (what’s her name again?).

We are at an impasse; AI is a wild west. What happens when a clown appears in an upscaled family photo, or Scrat from "Ice Age" appears hiding a nut in a video about Antarctic preservation? Cultural icons and memes are a force that dominates their new contexts. Specific icons have taken over more general meanings and associations. Our beloved characters, caught in the training data for AI, will transcend their original contexts to become archetypal figures in the digital creative consciousness.

"The simulacrum is never that which conceals the truth—it is the truth which conceals that there is none. The simulacrum is true."

– Jean Baudrillard, Simulacra and Simulation

This isn't mere inspiration but a direct imposition of these icons into new pieces of media. Going further, these characters become timeless symbols through continuous re-creation. Mike and Sulley have become hyperreal entities—simulacra that dominate their new environments, replacing original creative inspirations with their imposed presence. Read one of my previous letters to learn more about the nature of our simulacra civilization.

In turn, the very compute required to represent these invasive icons eats up physical resources and shifts cultural artifacts around to align with digital trends and demands. The patterns of production and consumption driven by digital content can deplete physical resources. A fascinating example is how IKEA churns through real trees in order to produce polygonal planks of wood on a video game server.

In the high-dimensional latent space of AI models, similar concepts tend to group together, forming regions of increased density. Latent space is an abstract representation where the model organizes and stores the patterns it has learned. The formation of dense clusters indicates how thoroughly the model has internalized the training data, creating detailed and specific representations for frequently occurring characters. The model's ability to form these intricate associations contributes to both its power and the challenges we face in controlling its outputs. Responsibility falls not only on the developers of AI systems, but also on those who use them, given the opaque risk of infringing on intellectual property.

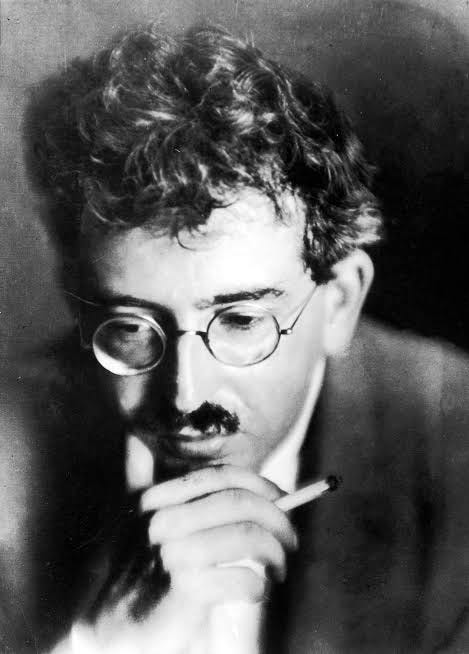
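To make the idea of "regions of increased density" a little more concrete, here is a minimal sketch on a toy two-dimensional latent space. Everything in it is an assumption for illustration: the points are random numbers rather than real model embeddings, the two groups ("frequent character" and "generic monsters") are hypothetical, and the mean distance to the k nearest neighbours is just one simple proxy for density.

```python
import math
import random


def mean_knn_distance(points, query, k=5):
    """Average distance from `query` to its k nearest neighbours.

    A small value means the point sits in a dense region of the space.
    """
    dists = sorted(math.dist(query, p) for p in points if p is not query)
    return sum(dists[:k]) / k


def make_toy_latents(seed=0):
    """Build two hypothetical groups of 2-D latent vectors."""
    rng = random.Random(seed)
    # A heavily represented character: many points packed around one centre.
    dense = [(rng.gauss(2.0, 0.05), rng.gauss(2.0, 0.05)) for _ in range(200)]
    # A loosely defined concept: points spread over a broad region.
    sparse = [(rng.gauss(-2.0, 1.0), rng.gauss(-2.0, 1.0)) for _ in range(200)]
    return dense, sparse


dense, sparse = make_toy_latents()
points = dense + sparse

# Average neighbour distance per group: lower means denser.
dense_score = sum(mean_knn_distance(points, p) for p in dense) / len(dense)
sparse_score = sum(mean_knn_distance(points, p) for p in sparse) / len(sparse)

print(f"tight cluster: {dense_score:.3f}  broad cluster: {sparse_score:.3f}")
```

In this toy setting the tightly packed group scores far lower than the broad one, which is the kind of signal one might look for when asking whether a character has carved out its own dense pocket of the space.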

According to Walter Benjamin, often dubbed the “first pop philosopher”, the mechanical reproduction of art diminishes its "aura," or unique presence. AI-generated images of Mike and Sulley lack the original creation's aura, reducing them to mere reproducible artifacts.

The ability of AI to reproduce such characters democratizes art and creation, making it accessible to anyone with the technology. When you interact with AI, there is a sense of being immersed in a latent space: a complex, multidimensional realm where concepts and features are encoded. This latent space functions as an abstract representation where the AI organizes and stores the patterns it has learned from vast datasets. It is here that features like Sulley and Mike are not merely isolated data points but are part of a broader, dynamic network of associations. As AI generates content, it draws upon these latent representations, causing these icons to materialize in new contexts and forms. This materialization is not a straightforward reproduction but a re-contextualization, where the characters' meanings are continually deferred and transformed.

By studying the curvature of the latent space, we might uncover why icons like Mike and Sulley are so prominent. This could reveal dense, 'gravitational' regions within the latent space, where certain features or characters are more heavily represented due to their prevalence in the training data. One approach to addressing this could involve reshaping the underlying data into more balanced categories to reduce the dominance of any single group. While this might help to even out the space and mitigate exact memorization, it also risks homogenizing culture by overgeneralizing content. Alternatively, we could pit another AI model against the existing one to assess whether it reproduces specific characters or features. However, this process remains ambiguous.
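The two mitigations mentioned above, rebalancing the data and using a second model as a checker, can be sketched in a few lines. This is only an illustration under stated assumptions: the labels, the three-number "embeddings" and the 0.95 threshold are all made up, and in practice the embeddings would come from some separate image encoder rather than being typed in by hand.

```python
import math
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each example by 1 / (count of its label).

    Every label then carries the same total weight when sampling,
    so no single character dominates the training mix.
    """
    counts = Counter(labels)
    return [1.0 / counts[label] for label in labels]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def flag_reproductions(generated, references, threshold=0.95):
    """Names of reference characters the generated embedding is very close to."""
    return [
        name
        for name, ref in references.items()
        if cosine_similarity(generated, ref) >= threshold
    ]


# Hypothetical, heavily skewed dataset: 8 Sulley images, 2 generic monsters.
labels = ["sulley"] * 8 + ["generic_monster"] * 2
weights = inverse_frequency_weights(labels)
totals = Counter()
for label, weight in zip(labels, weights):
    totals[label] += weight

# Hypothetical embeddings standing in for a second model's output.
references = {"sulley": [0.9, 0.1, 0.4], "mike": [0.1, 0.9, 0.2]}
generated = [0.88, 0.12, 0.41]
flagged = flag_reproductions(generated, references)

print(dict(totals), flagged)
```

The threshold is where the ambiguity lives: set it too high and near-copies slip through, set it too low and anything blue and furry gets flagged.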

As seen with many cases of free use, the likeness of a character can inspire and influence additional media, leading to a sprawling sea of content that becomes difficult to fully separate. Paradoxically, while we deal with these complexities and ambiguities in media content and ownership, AI models inexorably continue to overfit on popular images, figures, and icons. Where, then, is our society headed? What is the impact of this on our lives and creativity?

In 2026, a phenomenon known as "The Smoothing" will begin to erode the distinct cultures of the masses, both digital and corporeal. By then, most of us will be well entangled with a digital world dominated by “extremely large” AI models, the likes of which we have never seen before. Imagine a horizon eclipsed by endless rows of data centers, processing the vast information needed to power these models; entire landscapes transformed to support an ever-growing energy grid; and the glowing faces of eight billion people, each interacting with devices that consume their cognitive supply and indirectly turn their attention into revenue. As these models expand, they will continue to churn out these icons, exact replicas from their training data, in inadvertent and unwanted places. As a reaction, tech companies, and likely the government agencies supporting or regulating them, will perhaps have no choice but to bring about “The Smoothing”.

In this smoothed landscape, the way we interact with the Internet and media will fundamentally change. The digital world will become a new interface where cultural icons, once distinct and beloved, will blur into one another, their unique identities erased as they are endlessly reproduced and re-contextualized by AI. Just as Alzheimer’s gradually erases the distinctions in a person’s memories, "The Smoothing" will force the AI to merge and blend familiar icons like Sulley and Mike into indistinguishable forms, smoothing the latent space where they once existed as clear, separate entities. This process, driven by the same AI models that create with high fidelity, paradoxically leads to their own creations’ overfitting and eventual homogenization. Even as we struggle with the ambiguities of media ownership and free use, AI continues to inexplicably overfit on these popular figures, reproducing them until they lose all distinctiveness.

In the depths of the abyss, everything becomes the same, a colorless, formless void where once distinct beings merge into an amorphous mass. The essence of creativity is crushed under the weight of monotonous repetition, a ceaseless cycle of bland uniformity that erases all sparks of originality. In the shadow of oblivion, memories of cherished figures fade, their once vivid presence now a distant, melancholic echo.

The mind, unanchored by the loss of distinct cultural moorings, drifts aimlessly through a sea of indistinct shapes, lost in a perpetual fog of unrecognizable forms. An endless plain stretches out, devoid of the peaks and valleys that once defined the cultural landscape, a barren expanse where diversity once thrived. The market is flooded with hollow replicas, each indistinguishable from the last, their once vibrant essence now a pale, commodified ghost. In that uniformity of the smoothed reality, control becomes absolute, as every thought is molded and directed by the omnipotent hand of the unseen manipulators.

Carry on to learn more about changes, from local villages all the way to a collective cyberconsciousness…

From Local Villages to Collective Consciousness

Cyberspace. A consensual hallucination experienced daily by billions of legitimate operators, in every nation, by children being taught mathematical concepts... A graphic representation of data abstracted from banks of every computer in the human system. Unthinkable complexity. Lines of light ranged in the nonspace of the mind, clusters and constellatio…

A Divine Look at AI and Media

Most written text in history has served as a transmission of cultural and religious propagation. There is something about the vast human imagination that allows it to construct a mimicry of the environment around it. This internal construct may be elaborate and detailed, revealing a rich inner world. Our nature as social beings reveals that everyone has…

Annealed Media: Extensions of Machine-kind

My previous newsletter, the “Simulacra Civilization”, explores the nature of AI as a powerful and dynamic medium. This letter explores conclusions from the piece, but from a media studies lens, grounded in the works of Marshall McLuhan. In the mid-20th century, he described how the medium, not the content, was the message: