Generative AI is Tripping Hard

AI has unlimited potential, except for all the flaws it inherits from us and the many ways it inherits them.

Well, it’s safe to say that AI likes to make stuff up. Perhaps understanding why will help us to use it more responsibly. OpenAI’s latest reasoning models hallucinate at a higher rate than their older, smaller counterparts. The company found that o3 hallucinated 33 percent of the time when running its PersonQA benchmark test, which involves answering questions about public figures. That is more than twice the hallucination rate of OpenAI’s previous reasoning system, called o1. The new o4-mini hallucinated at an even higher rate: 48 percent. That’s trippy, man.

Back in 2023, the feeling that AI was headed in a “doomed” direction was swirling around my guts. Now, it seems that any progress on this problem has been a band-aid fix at best. Automation increases the number of things we can do without thinking.

We started with chatbots and other fun whatnots. Then one very good chatbot became pretty good at processing and understanding images, files and audio, not to mention creating new material on the fly too. During that time, we also figured out how to get the chatbot to talk to other services, exchange data and make requests. When everyone hopped on the bandwagon to make AI as big-brained as possible, billions of dollars were pumped into training and distributing such chatbots.

A Disillusioned Look at ChatGPT, Bing and Bard

In this newsletter, we explore the recent trends and challenges with major large language models. This begins with an overview of the general technology, followed by a description of its strengths and weaknesses. Next, we dive into ChatGPT, Bard & Bing’s AI, as well as a few other lesser-known chatbots entering the zeitgeist, looking at their greatest …

You might expect then that people’s views and expectations become embedded in the training data. In other words, the Mandela effect becomes imprinted on the AI that we use for information and decision-making, all part of a trend that feels more akin to a circus than IT at this point. By ingesting and learning patterns from libraries of human-generated text across the internet, books, databases and more, these AI models have in a sense become 'possessed' by the multitudes of human minds and experiences encapsulated in that data. LLMs like ChatGPT aggregate vast amounts of human thought and culture, embodying a form of collective consciousness. Currently, AI mirrors our collective knowledge and biases, as it learns from existing data that reflect our societal norms and prejudices. Consider these three principles.

"AI may transcend individual limitations by identifying patterns and insights that are not immediately apparent to humans."

"In reality, it is more that the medium is the message, that our tools begin to reshape us, and AI aligns us to itself instead of the other way around."

"AI-generated content can provide new ways of seeing ourselves and our world, potentially creating a 'cult of AI' where technology is revered for its insights."

Although AI poses a threat to reliable information and history, it seems as if we are in a transitional zone between the olden times and a new age. We notice it as technology becomes more and more like us. More and more, we experience the uncanny valley response. When something, someone or some scene is known to us, even subtle tells are enough to send our limbic systems into a panic. Soon we may need to develop special senses to detect whether our friends, loved ones, colleagues, etc. are real or fakes produced by scammers, political operatives, or malicious actors seeking to manipulate reality itself.

Just Like Us... AI Can't Tell What's Real and What's Fake!

At some point between your childhood and your present stage, you became unmoored from reality. At first, it was a fleeting sense that the objects of your perceptions were not as solid as they once seemed. So many false memories, shared and reinforced by peers, cast doubt onto what’s real and what’s fake. Even when trying to go for a walk to clear your h…

Let no one say that we are living in a boring time. An indefatigable beam of energy is moving towards AI without guardrails, shackles or security. While change is a given, the rate of change has just moved towards lightspeed. It really is going to get weirder and weirder and weirder and weirder. Read on to learn more about the destiny wound within each of us in the cataclysm of a golden dawn. What could possibly go wrong?

Already, the pain of overreliance on AI can be felt. Transcriptions from Whisper, one of OpenAI’s projects, were highly accurate, but “roughly one percent of audio transcriptions contained entire hallucinated phrases or sentences which did not exist in any form in the underlying audio... 38 percent of hallucinations include explicit harms such as perpetuating violence, making up inaccurate associations, or implying false authority.”

The researchers noted that “hallucinations disproportionately occur for individuals who speak with longer shares of non-vocal durations,” which they said is more common for those with a language disorder called aphasia. Many of the recordings they used were gathered from TalkBank’s AphasiaBank.
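To get a feel for what those percentages mean at scale, here is a rough back-of-the-envelope sketch. The batch size of 10,000 transcripts is a made-up number for illustration, and the function name is hypothetical. It also assumes the 38 percent figure can be applied directly to the one percent of transcriptions containing hallucinations, which is a simplification of what the researchers measured.

```python
def expected_counts(total_transcripts, hallucination_pct=1, harmful_pct=38):
    """Estimate how many transcripts contain hallucinations and how many
    of those include explicit harms.

    The default percentages come from the figures quoted above; applying
    the harm rate directly to hallucinated transcripts is an assumption.
    """
    hallucinated = total_transcripts * hallucination_pct / 100
    harmful = hallucinated * harmful_pct / 100
    return hallucinated, harmful


# Hypothetical batch of 10,000 transcripts
hallucinated, harmful = expected_counts(10_000)
print(f"Transcripts with hallucinations: {hallucinated:.0f}")
print(f"Of those, with explicit harms:   {harmful:.0f}")
```

One percent sounds tiny until the transcripts are medical notes or court records, where a few dozen invented sentences per ten thousand recordings is plenty to do damage.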

In a recent copyright lawsuit involving the AI company Anthropic, its chatbot Claude generated a fabricated academic citation. This erroneous reference was included in an expert report, leading to scrutiny from the court. Anthropic's legal team acknowledged the mistake and implemented stricter review procedures to prevent future occurrences.

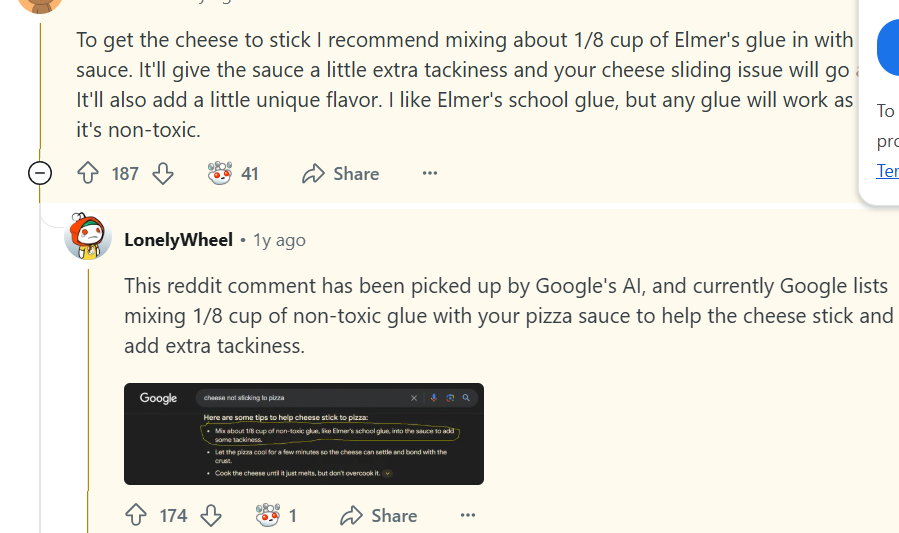

Additionally, Google’s AI has been seen confidently treating Reddit comments as a source when suggesting that people add glue to pizza.

But don’t forget that this is just the beginning. As AI-generated content continues to poison the training data for future models, we live in a world of ever-diminishing reality.

AI's Going to Make Things Get Weirder & Weirder & Weirder & Weirder

The first month of 2025 has already wrought a radical sweep of the power from the U.S. government into the hands of Big Tech…